AWS Lambda stands as a powerful tool for executing code without the burden of server provisioning or management. However, as applications scale and traffic surges, managing the concurrent execution of Lambda functions becomes critical.

Running Lambda at scale is a balancing act between the influx of requests and the time it takes for your lambda to process them. Let’s unravel the significance, potential pitfalls, and the strategies to configure lambda concurrency effectively.

What is concurrency?

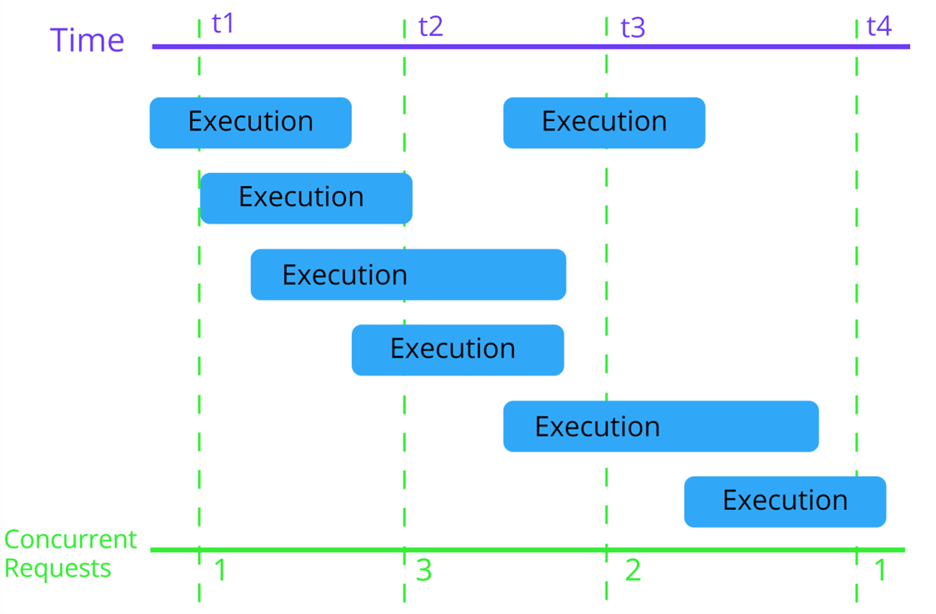

According to the AWS developer documentation, concurrency is the number of in-flight requests your AWS Lambda function is handling at the same time. The higher the concurrency, the more work your lambda is performing. Concurrency depends not only on the number of requests you receive, but also on how long your lambda takes to process each one. For example, in the diagram above, we received 7 requests, but our max concurrency was only ever 3.
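To make the relationship between request count, duration, and concurrency concrete, here is a minimal Python sketch. The request timings are made up for illustration and are not read from the diagram; `max_concurrency` and `steady_state_concurrency` are hypothetical helper names. The second function uses the rule of thumb from the AWS documentation that concurrency is roughly average requests per second multiplied by average request duration.

```python
def max_concurrency(requests):
    """Return the peak number of requests in flight at the same time.

    `requests` is a list of (start_time, duration) pairs, in seconds.
    """
    events = []
    for start, duration in requests:
        events.append((start, 1))              # a request begins
        events.append((start + duration, -1))  # a request finishes
    # Sorting tuples puts a finish (-1) before a start (+1) at the same
    # instant, so a request that ends exactly when another begins does
    # not count as overlapping.
    events.sort()
    in_flight = peak = 0
    for _, delta in events:
        in_flight += delta
        peak = max(peak, in_flight)
    return peak


def steady_state_concurrency(requests_per_second, avg_duration_seconds):
    """Estimate concurrency as request rate multiplied by average duration."""
    return requests_per_second * avg_duration_seconds


# Seven illustrative requests: (start second, duration in seconds).
requests = [(0, 3), (1, 3), (2, 3), (5, 1), (6, 2), (7, 2), (9, 1)]
print(max_concurrency(requests))           # 3
print(steady_state_concurrency(100, 0.5))  # 50.0
```

Seven requests arrive, but because most of them finish before the next ones start, no more than three are ever in flight at once. Halving the duration of each request would lower the peak without changing the request count.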

Lambda scaling pitfalls

As any programmer knows, processes that run in parallel can be fraught with potential issues. The deadliest pitfall when scaling a lambda is throttling. When a lambda is throttled, its requests are rejected back to the caller. Throttling can also cause other lambdas in the same account to reject requests and create thundering herds for downstream services.

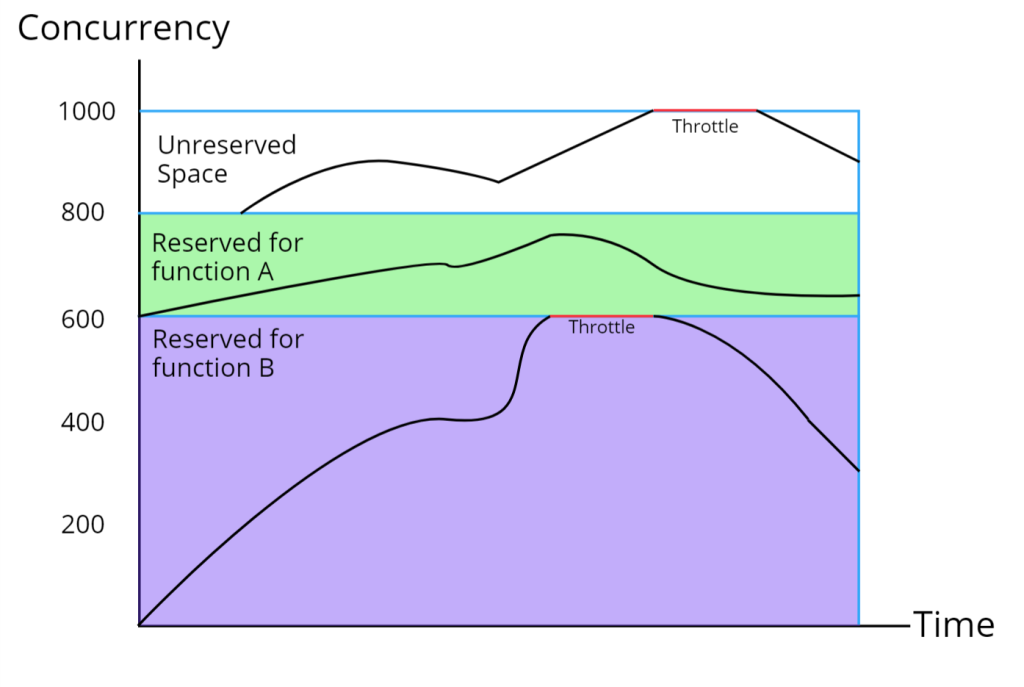

Each AWS account has a concurrency limit that is shared by all functions in a region. This is a shared pool of on-demand lambda concurrency. By default, the limit is 1,000 concurrent executions. This can cause issues if business-critical lambdas are impacted by less important processes consuming the shared pool. An example of this could be a chatty log enrichment lambda smothering a “checkout customer” lambda.
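The noisy-neighbor effect can be illustrated with a small Python model. This is a deliberate simplification and not how Lambda allocates capacity internally: it assumes functions draw from one shared pool in the order they ask, and the function names and demand figures are hypothetical.

```python
ACCOUNT_LIMIT = 1000  # default regional concurrency limit


def admit(demand_by_function, account_limit=ACCOUNT_LIMIT):
    """Grant concurrency from one shared pool, first come, first served.

    Returns {function_name: (granted, throttled)}.
    """
    remaining = account_limit
    result = {}
    for name, demand in demand_by_function.items():
        granted = min(demand, remaining)
        result[name] = (granted, demand - granted)
        remaining -= granted
    return result


# The chatty function grabs most of the pool before checkout needs it.
demand = {"log-enrichment": 980, "checkout-customer": 50}
print(admit(demand))
# {'log-enrichment': (980, 0), 'checkout-customer': (20, 30)}
```

In this model the checkout function only needs 50 concurrent executions, yet 30 of its requests are throttled because the less important function got there first.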

Configuring your lambda to avoid the pitfalls

So how can we help insulate the high priority lambdas from noisy neighbors?

AWS provides two main ways to configure your lambda’s concurrency: reserved concurrency and provisioned concurrency.

Reserved Concurrency

Reserved concurrency sets the maximum number of concurrent instances you want to allocate to your function. It solves the problem of critical lambdas being throttled because other lambdas in the same account have consumed the shared pool. This allows your function to scale independently of all other functions in your account. Additionally, it guarantees that your function does not scale out of control, since a maximum is set.

Configuring reserved concurrency partitions your total account concurrency, which lowers the amount available to all the other lambdas that do not use reserved concurrency. If your function doesn’t use up all the concurrency that you reserve for it, you’re effectively wasting that concurrency.
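As a rough sketch of what setting a reservation looks like in code, the helper below wraps two boto3 Lambda client calls, `put_function_concurrency` and `get_account_settings`. The helper name `reserve_concurrency`, the function name, and the value of 100 are hypothetical. The client is passed in as an argument, so the block itself does not import boto3 or touch an AWS account.

```python
def reserve_concurrency(lambda_client, function_name, reserved):
    """Reserve `reserved` concurrent executions for one function.

    Returns the concurrency left in the shared, unreserved pool so the
    effect on every other function in the account is visible.
    """
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=reserved,
    )
    limits = lambda_client.get_account_settings()["AccountLimit"]
    return limits["UnreservedConcurrentExecutions"]
```

With boto3 installed and credentials configured, calling `reserve_concurrency(boto3.client("lambda"), "checkout-customer", 100)` on an account with the default limit and no other reservations would be expected to leave 900 in the unreserved pool. Lambda also keeps a minimum of 100 unreserved executions available, so a request that would reserve more than that allows is rejected.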

Choosing the right value to reserve for a business-critical lambda is challenging. If you reserve too little, you risk throttling due to your own restrictions. If you reserve too much, the rest of the lambdas in your account could suffer. Analyze your lambda’s historical performance to determine a baseline, then monitor and adjust. Or, if it is a new function, start it with unreserved concurrency.
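One possible way to turn historical data into a starting value is to take the highest observed concurrency and add some headroom. The sketch below is only an assumption about how you might do this: the 20% headroom, the helper name, and the sample numbers are all hypothetical, and the result is a starting point to monitor and adjust, not a recommendation.

```python
import math


def suggest_reserved_concurrency(observed_peaks, headroom=0.2):
    """Suggest a starting reservation: highest observed peak plus headroom.

    `observed_peaks` holds peak concurrent executions per period, for
    example daily maximums of the function's concurrency metric.
    """
    if not observed_peaks:
        raise ValueError("no history yet; start with unreserved concurrency")
    return math.ceil(max(observed_peaks) * (1 + headroom))


print(suggest_reserved_concurrency([42, 57, 61, 48]))  # 74
```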

Provisioned Concurrency

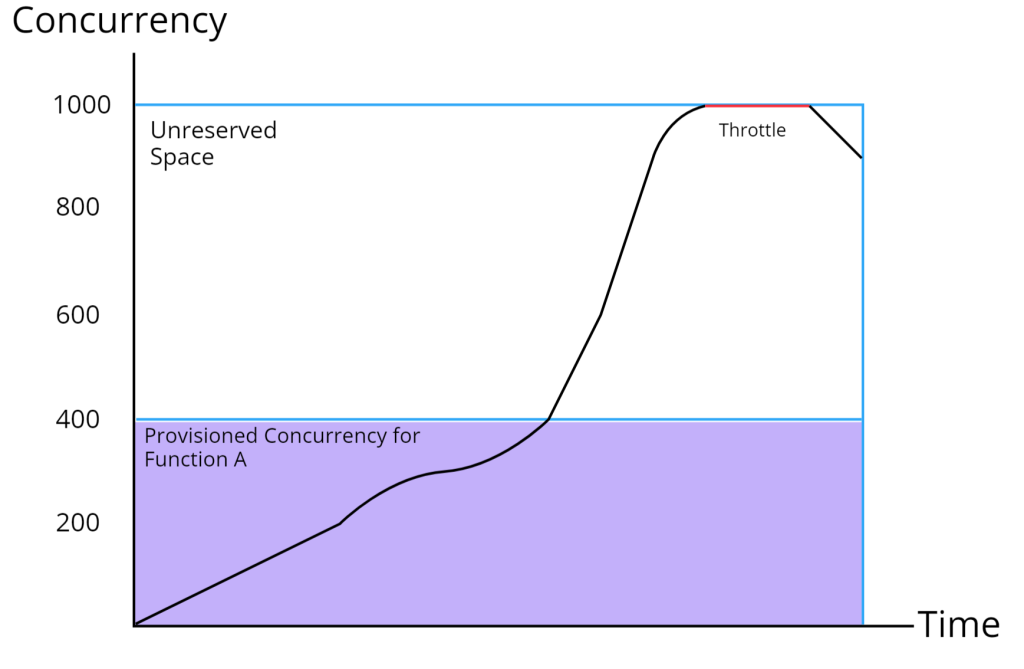

Provisioned concurrency is the number of pre-initialized execution environments you want to allocate to your function. This is like paying for dedicated servers up front, so they are ready to go when traffic arrives. Provisioning instances improves response time by removing lambda initialization time but incurs an ongoing cost even if your lambdas are not serving traffic.

Lambdas that support APIs can make good use of provisioned concurrency. It ensures there is always a lambda ready to serve a request. Using only provisioned concurrency does not limit your function’s maximum concurrency.
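A minimal sketch of configuring provisioned concurrency with boto3 might look like the following. It wraps the Lambda client call `put_provisioned_concurrency_config`; the helper name and the example values are hypothetical. Provisioned concurrency is set on a published version or an alias, not on `$LATEST`, which is why a qualifier is required.

```python
def provision_concurrency(lambda_client, function_name, qualifier, environments):
    """Ask Lambda to keep `environments` execution environments initialized.

    `qualifier` must be a published version number or an alias name.
    Returns the status reported by Lambda; allocation is asynchronous,
    so the first status is usually not the final one.
    """
    response = lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=qualifier,
        ProvisionedConcurrentExecutions=environments,
    )
    return response.get("Status")
```

For example, `provision_concurrency(boto3.client("lambda"), "checkout-customer", "live", 5)` would request five warm environments for a hypothetical `live` alias. Those environments are billed for as long as the configuration exists, whether or not they serve traffic.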

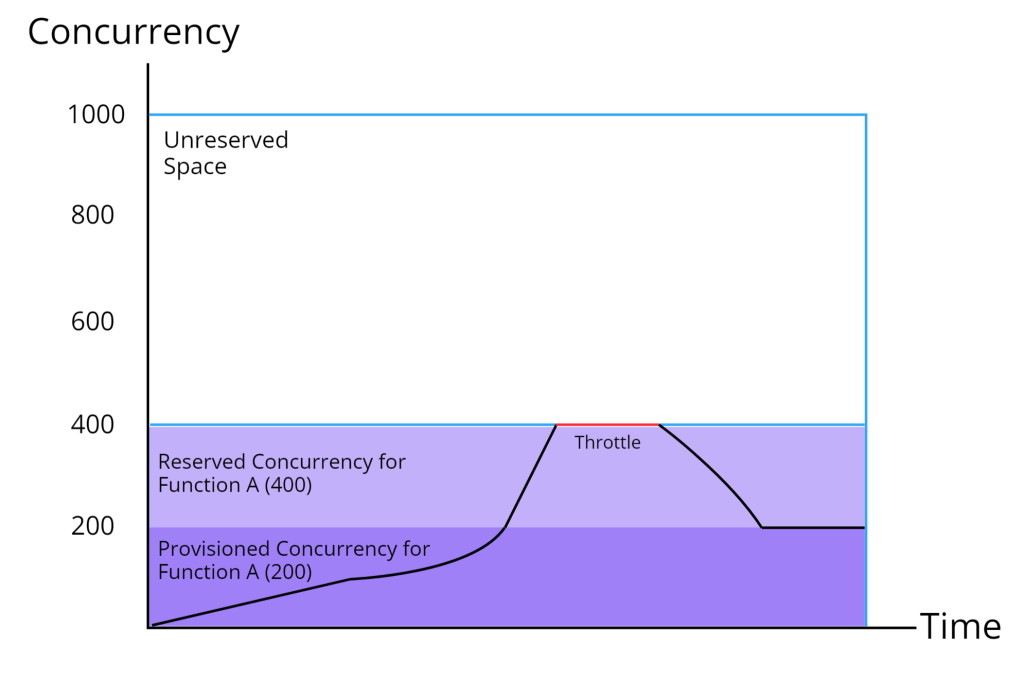

Can you use both?

Yes! Imagine a scenario where you need a few dedicated lambdas during the week to serve “business as usual” traffic but require a higher threshold for a batch job on the weekend. Provisioned concurrency covers the weekday baseline, while reserved concurrency lets the function grow beyond the provisioned amount on the weekend and still protects the rest of your account from the spike in traffic.
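The way the two settings interact can be sketched as a simple decision model. This is an illustration of the idea, not Lambda’s actual scheduler, and the helper name and numbers are hypothetical. It does encode one real constraint: a function’s provisioned concurrency cannot exceed its reserved concurrency.

```python
def route_request(in_flight, provisioned, reserved):
    """Classify a new request given how many are already in flight.

    provisioned: pre-initialized environments kept warm
    reserved:    hard cap on the function's concurrency
    """
    if provisioned > reserved:
        raise ValueError("provisioned concurrency cannot exceed reserved")
    if in_flight < provisioned:
        return "provisioned"  # served by a warm, pre-initialized environment
    if in_flight < reserved:
        return "on-demand"    # scales past the warm pool; may cold start
    return "throttled"        # cap reached; the rest of the account is protected


# Weekday baseline of 5 warm environments, weekend ceiling of 50.
print(route_request(3, provisioned=5, reserved=50))   # provisioned
print(route_request(20, provisioned=5, reserved=50))  # on-demand
print(route_request(50, provisioned=5, reserved=50))  # throttled
```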

In Conclusion

These concurrency settings barely scratch the surface of serverless scaling in AWS. Understanding them, however, will allow you to insulate your business-critical processes, keep response times sharp, and reduce compute cost.

Running lambda at scale can be a delicate balancing act that requires constant vigilance and adjustment. Business growth, or even a new code deployment, can change your performance profile, which can have a big impact on how well your serverless solution handles traffic.

Don’t hesitate to reach out regarding your AWS serverless solution. We are happy to help!